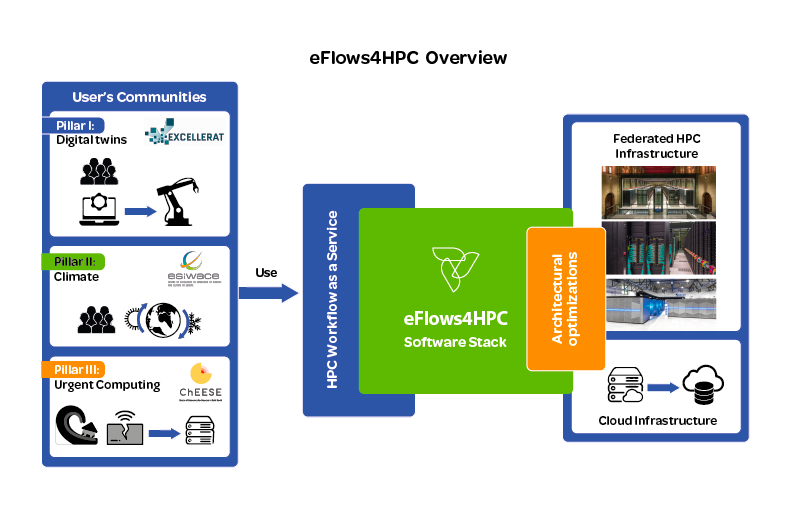

Today, developers lack tools that enable the development of complex workflows involving HPC simulation and modelling with data analytics (DA) and machine learning (ML). The eFlows4HPC project aims to deliver a workflow software stack and an additional set of services to enable the integration of HPC simulation and modelling with big data analytics and machine learning in scientific and industrial applications.

The software stack will allow developers to build innovative adaptive workflows that efficiently use computing resources and innovative data management solutions. To attract first-time users of HPC systems, the project will provide HPC Workflows as a Service (HPCWaaS), an environment for sharing, reusing, deploying and executing existing workflows on HPC systems. The project will leverage workflow technologies, machine learning and big data libraries from previous open-source European initiatives. Application kernels will receive specific optimizations targeting the use of accelerators (FPGAs, GPUs) and the European Processor Initiative (EPI). To demonstrate the workflow software stack, use cases from three thematic pillars have been selected.

Pillar I focuses on the construction of Digital Twins for the design of complex manufactured objects, integrating state-of-the-art adaptive solvers with machine learning and data mining and contributing to the Industry 4.0 vision. Pillar II develops innovative adaptive workflows for climate and the study of Tropical Cyclones (TC) in the context of the CMIP6 experiment. Pillar III explores the modelling of natural catastrophes, in particular earthquakes and their associated tsunamis, shortly after such an event is recorded. Leveraging two existing workflows, Pillar III will work on integrating them with the eFlows4HPC software stack and on producing policies for urgent access to supercomputers. The results from the three Pillars will be demonstrated to the target community CoEs to foster adoption and receive feedback.